Cheriton School of Computer Science PhD candidate Chang Ge, Professors Ihab Ilyas and Xi He, and their colleague Professor Ashwin Machanavajjhala at Duke University have developed a system that allows data owners to regulate how much of their privacy may be breached when personal information is being analyzed.

Their novel system called APEx — short for accuracy-aware privacy engine for data exploration — also lessens the burden on data scientists who traditionally have had to compromise the accuracy of their analysis to give their clients certain privacy guarantees.

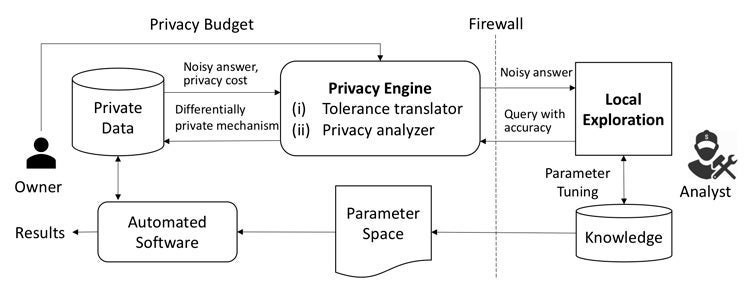

APEx translates data scientists’ queries and accuracy bounds into appropriate mechanisms that satisfy differential privacy, a rigorous mathematical definition of privacy under which it cannot be determined from the output whether any individual’s data was included in the original dataset. The chosen mechanism incurs the least privacy leakage possible for the accuracy guarantee the data scientist specified beforehand, and returns a noisy answer to the data scientist.
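To make the idea of trading accuracy for privacy concrete, here is a minimal sketch of one textbook mechanism, the Laplace mechanism, applied to a single counting query. It is not taken from the APEx paper. The function names and the (alpha, beta) form of the accuracy bound (error at most alpha with probability at least 1 - beta) are assumptions made for illustration.

```python
import math
import random


def epsilon_for_accuracy(alpha: float, beta: float, sensitivity: float = 1.0) -> float:
    """Smallest epsilon for which Laplace noise of scale sensitivity/epsilon
    stays within +/- alpha with probability at least 1 - beta.

    For Laplace noise with scale b, P(|noise| > alpha) = exp(-alpha / b),
    so requiring exp(-alpha * epsilon / sensitivity) <= beta gives the bound below.
    """
    if alpha <= 0 or not 0 < beta < 1 or sensitivity <= 0:
        raise ValueError("need alpha > 0, 0 < beta < 1, sensitivity > 0")
    return sensitivity * math.log(1.0 / beta) / alpha


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def noisy_count(true_count: int, alpha: float, beta: float, rng: random.Random):
    """Answer a counting query (sensitivity 1) under the requested accuracy.

    Returns the noisy answer and the epsilon that was spent on it.
    """
    epsilon = epsilon_for_accuracy(alpha, beta, sensitivity=1.0)
    answer = true_count + laplace_noise(1.0 / epsilon, rng)
    return answer, epsilon
```

Under these assumptions, asking for an error of at most 10 with 95 percent probability (alpha = 10, beta = 0.05) costs an epsilon of about 0.3. Tightening alpha raises the cost proportionally.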

Chang Ge, a PhD student at the Cheriton School of Computer Science, is interested in big data systems, data quality, data exploration and data cleaning on private datasets.

“While general purpose differentially private query answering systems exist, they are not really meant to support interactive querying, and they fall short in two key aspects,” Chang Ge said.

“In order to achieve high accuracy, the analyst has to be familiar with the privacy literature to understand how the system adds noise and to identify if the desired results can be achieved in the first place. And somewhat ironically, these systems do not provide any guarantees to the data analyst on the quality they really care about, namely correctness of query answers.”

APEx solves these two issues by choosing the suitable differentially private mechanism with the least privacy loss that answers an input query under a specified accuracy guarantee. Data analysts can then reliably explore data while ensuring a provable guarantee of privacy to data owners.
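The selection step described above can be sketched as a simple minimization. The sketch assumes each candidate mechanism can be reduced to a function that maps an accuracy requirement (alpha, beta) to the epsilon it would need. The names `laplace_cost` and `choose_mechanism` and the two example candidates are hypothetical and are not APEx's actual mechanisms or API.

```python
import math
from typing import Callable, Dict, Tuple

# A cost function maps (alpha, beta) to the epsilon a mechanism would spend.
CostFn = Callable[[float, float], float]


def laplace_cost(sensitivity: float) -> CostFn:
    """Cost function for Laplace noise on one query of the given sensitivity."""
    def cost(alpha: float, beta: float) -> float:
        return sensitivity * math.log(1.0 / beta) / alpha
    return cost


def choose_mechanism(
    candidates: Dict[str, CostFn], alpha: float, beta: float
) -> Tuple[str, float]:
    """Return the name and epsilon of the cheapest candidate mechanism."""
    if not candidates:
        raise ValueError("at least one candidate mechanism is required")
    costs = {name: fn(alpha, beta) for name, fn in candidates.items()}
    best = min(costs, key=costs.get)
    return best, costs[best]


# Illustrative candidates only: the same noise type at two assumed sensitivities.
example_candidates = {
    "laplace_sensitivity_1": laplace_cost(1.0),
    "laplace_sensitivity_4": laplace_cost(4.0),
}
name, epsilon = choose_mechanism(example_candidates, alpha=10, beta=0.05)
```

A real system would register mechanisms whose costs differ by query type and possibly by the data itself. The sketch only shows the "least privacy loss under a fixed accuracy bound" rule.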

In developing APEx, Chang Ge along with Professors Ihab Ilyas, Xi He and Ashwin Machanavajjhala conducted a comprehensive empirical evaluation on real datasets with benchmark queries and a case study on entity resolution. They found that APEx can answer a variety of queries accurately with small privacy loss, and can support data exploration for entity resolution with high accuracy under reasonable privacy settings.

The figure illustrates the workflow of APEx, which involves two parties: the data owner and the data analyst. The data owner holds the private data, while the analyst is on the other side of the ‘firewall’ with no direct access to it. The analyst interacts with the sensitive data by issuing queries through the local exploration module, with the goal of judiciously choosing parameters for automatic software. The privacy engine answers these queries and tracks the privacy loss.
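The privacy engine's bookkeeping role can be pictured with a small ledger. The sketch assumes that the owner fixes a total budget and that losses add up across queries, which is the standard sequential composition property of differential privacy. The class and method names are hypothetical, and APEx's own accounting may be more refined.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


class BudgetExceeded(Exception):
    """Raised when answering a query would exceed the owner's budget."""


@dataclass
class PrivacyLedger:
    budget: float                      # total epsilon the data owner allows
    spent: float = 0.0                 # epsilon consumed so far
    log: List[Tuple[str, float]] = field(default_factory=list)

    def charge(self, query_name: str, epsilon: float) -> float:
        """Record the loss of one answered query and return the remaining budget."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so floating-point rounding does not reject exact fits.
        if self.spent + epsilon > self.budget + 1e-12:
            raise BudgetExceeded(f"{query_name} needs {epsilon}, "
                                 f"only {self.budget - self.spent} left")
        self.spent += epsilon
        self.log.append((query_name, epsilon))
        return self.budget - self.spent
```

In this picture the analyst never touches the data directly. Each query passes through `charge` before an answer is released, and a query that would overdraw the budget is refused.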

“This system could help prevent future data breaches if policymakers were to pass legislation that would require APEx to be implemented by companies,” Chang Ge said.

“The policymaker will determine the privacy budget for a particular dataset. Once this is determined you can just leave the rest to APEx and customers could, in turn, be more confident that their data is protected.”

The paper detailing the new system, “APEx: Accuracy-Aware Differentially Private Data Exploration,” authored by Chang Ge, Ihab Ilyas and Xi He from the University of Waterloo’s Cheriton School of Computer Science and Professor Ashwin Machanavajjhala from Duke University, is slated to be presented at the 2019 ACM SIGMOD conference in June.