Recent master’s graduate Yelizaveta Brus, former postdoctoral researcher Rungroj Maipradit, Professor Earl T. Barr of University College London, and Professor Shane McIntosh of the Cheriton School of Computer Science have won an ACM SIGSOFT Distinguished Paper Award at ASE 2025, the 40th IEEE/ACM International Conference on Automated Software Engineering.

Their paper, Rechecking Recheck Requests in Continuous Integration: An Empirical Study of OpenStack, examined a common and costly issue in modern software development, namely, how developers should respond when a continuous integration system reports a failure that may not be caused by the code.

“Congratulations to Yelizaveta, Rungroj, Shane and their colleague Earl,” said Raouf Boutaba, University Professor and Director of the Cheriton School of Computer Science. “This important empirical study of recheck requests has the potential to save substantial amounts of developer time as well as compute resources.”

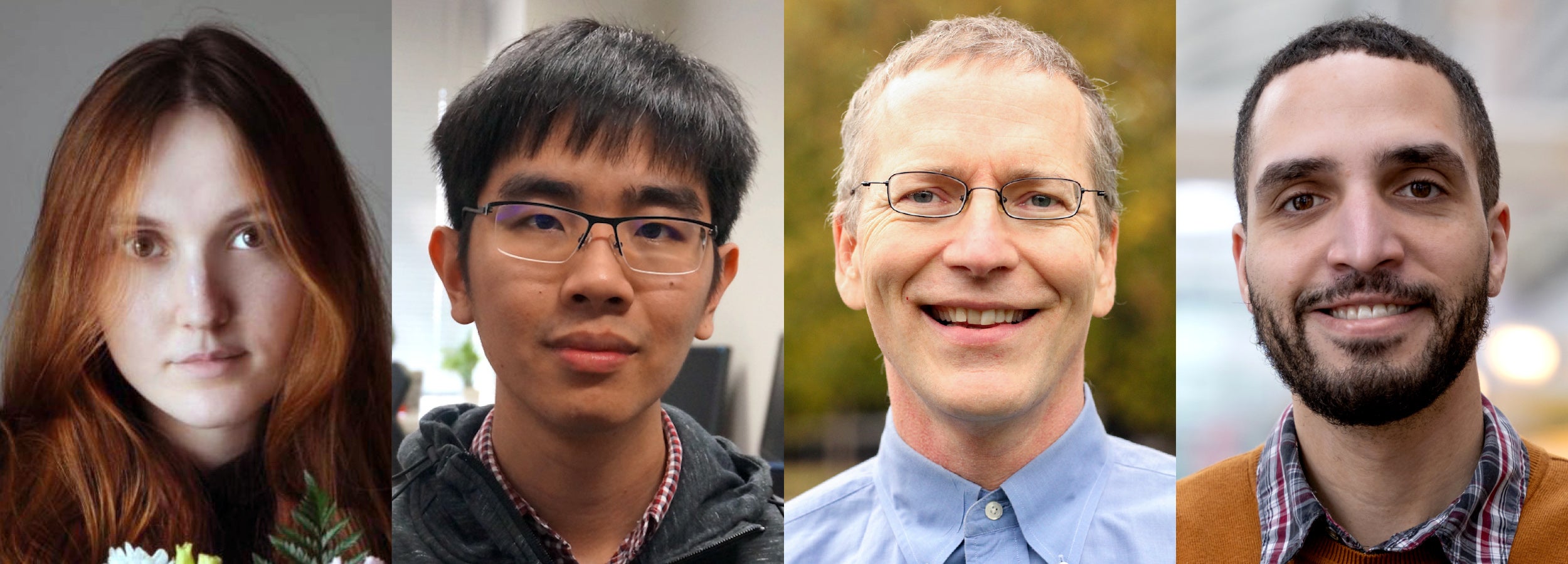

L to R: Yelizaveta Brus, Rungroj Maipradit, Earl T. Barr, Shane McIntosh

Yelizaveta Brus is a software engineer at Scotiabank. The award-winning paper is based on her master’s research, conducted at the Cheriton School of Computer Science under the supervision of Professor McIntosh.

Dr. Rungroj Maipradit is Team Lead, NLP, at AI Gen In Thailand. Previously, he was a postdoctoral researcher in Professor McIntosh’s group.

Earl T. Barr is a Professor of Software Engineering at University College London, where he leads the System Software Engineering Group and is a member of the Centre for Research on Evolution, Search and Testing.

Professor Shane McIntosh leads the Software Repository Excavation and Build Engineering Labs — aka, the Software REBELs — at the Cheriton School of Computer Science. His research group conducts empirical studies that mine the historical data generated during development of large-scale software systems.

More about this award-winning research

Continuous integration systems automatically test patch sets for errors, providing developers with timely feedback on code changes and helping them find mistakes before changes are merged into a shared repository. Sometimes, however, continuous integration tests fail for reasons unrelated to the developer’s patch. Infrastructure failures, service outages and non-deterministic, flaky build behaviour can all trigger a failure. In such cases, developers can request a recheck, which reruns the tests without changing the code. In the free and open-source cloud computing platform OpenStack alone, an estimated 187.4 compute years have been wasted on rechecks that failed repeatedly. Improving the efficiency of continuous integration systems by reducing wasteful rechecks is essential for better resource use, lower operational costs and improved developer productivity.

In their paper, the research team characterized recheck outcomes by fitting and analyzing statistical models that distinguish between successful and failing rechecks. They examined historical continuous integration data to identify patterns that differentiate successful rechecks from failed ones. Through an empirical study of nearly 315,000 recheck requests spanning 10 years from OpenStack, the research team found that their model could differentiate successful and failed rechecks well, outperforming baseline approaches by 23.6 percentage points.

Further analysis suggests that, if used to automatically skip rechecks predicted to fail, the model could prevent nearly 87 per cent of wasted rechecks. For OpenStack alone, this could save some 262 years of compute time. And if the model were used to automatically trigger rechecks that are predicted to succeed, it could save an estimated 247 years of developer time that otherwise might have been spent waiting, debugging or manually retrying builds. The authors also found that the model’s predictions remained stable whether they used recent data or longer-term historical data, suggesting it could be reliably deployed in production continuous integration systems.
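As a rough illustration of how a figure such as the share of wasted rechecks prevented might be computed, the sketch below applies a simple skip rule to a handful of made-up records. The `Recheck` type, the `p_success` scores, the 0.5 threshold and the function name are all hypothetical and chosen for illustration; this is not the authors' model or their evaluation code.

```python
from dataclasses import dataclass


@dataclass
class Recheck:
    p_success: float  # hypothetical model score: predicted chance the recheck passes
    succeeded: bool   # what actually happened when the recheck ran


def wasted_rechecks_prevented(rechecks, threshold=0.5):
    """Fraction of failed (wasted) rechecks that a skip rule would have avoided.

    A recheck is skipped when its predicted chance of success falls below
    `threshold` (an assumed cut-off, not a value from the paper).
    """
    wasted = [r for r in rechecks if not r.succeeded]
    if not wasted:
        return 0.0
    skipped = [r for r in wasted if r.p_success < threshold]
    return len(skipped) / len(wasted)


# Made-up history: three rechecks failed, and two of those scored below 0.5.
history = [
    Recheck(0.9, True),
    Recheck(0.2, False),
    Recheck(0.1, False),
    Recheck(0.7, False),
    Recheck(0.4, True),
]

share = wasted_rechecks_prevented(history)
print(f"{share:.0%} of wasted rechecks would have been skipped")
```

Note that the same rule would also skip the recheck scored 0.4, which in fact succeeded, so any real deployment would have to weigh compute saved against successful rechecks wrongly skipped.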

Directions for future work

During analysis of recheck requests, the researchers found that comments reveal insights into developer thoughts and emotions during the recheck process. An area for future research is sentiment analysis of such comments, which could offer insights into human factors influencing recheck outcomes.

Another area for investigation is developer perception of automated recheck prediction. Given the strong predictive performance of their model, it is important to study how best to integrate it into existing continuous integration workflows. One approach would be full automation, allowing the model to decide whether a failed build should be rechecked without developer intervention. Alternatively, the model could act as a decision-support tool, offering recommendations and explanations when a developer initiates a recheck, balancing automation with human oversight.
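The two integration options described above can be sketched as follows. This is a minimal illustration under stated assumptions: the `Mode` names, the `handle_failed_build` function, the score `p_success` and the 0.5 threshold are hypothetical and do not come from the paper or from any continuous integration system's API.

```python
from enum import Enum


class Mode(Enum):
    FULL_AUTOMATION = "full_automation"    # the model decides on its own
    DECISION_SUPPORT = "decision_support"  # the model only advises the developer


def handle_failed_build(p_success, mode, threshold=0.5):
    """Return an action or a recommendation for a failed build.

    `p_success` is a hypothetical predicted probability that a recheck
    would pass; `threshold` is an assumed cut-off.
    """
    predicted_success = p_success >= threshold
    if mode is Mode.FULL_AUTOMATION:
        # No developer involvement: recheck or skip based on the prediction.
        return "recheck" if predicted_success else "skip"
    # Decision support: leave the choice to the developer, but explain the prediction.
    verdict = "likely to pass" if predicted_success else "likely to fail again"
    return f"recommendation: recheck is {verdict} (score {p_success:.2f})"


print(handle_failed_build(0.8, Mode.FULL_AUTOMATION))
print(handle_failed_build(0.2, Mode.DECISION_SUPPORT))
```

In the decision-support mode the function never blocks a recheck; it only returns text that a tool could show to the developer, which keeps a human in the loop.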

To learn more about the award-winning research on which this article is based, please see Yelizaveta Brus, Rungroj Maipradit, Earl T. Barr, Shane McIntosh. Rechecking Recheck Requests in Continuous Integration: An Empirical Study of OpenStack. Proceedings of the International Conference on Automated Software Engineering, 2025.