Anudeep Das, a graduate student at the Cheriton School of Computer Science, has received a US$58,100 grant from Open Philanthropy to support his research on the security of large language models (LLMs). His project focuses on developing stealthy and resilient backdoors in LLMs, an emerging area of research as these models become more widely used.

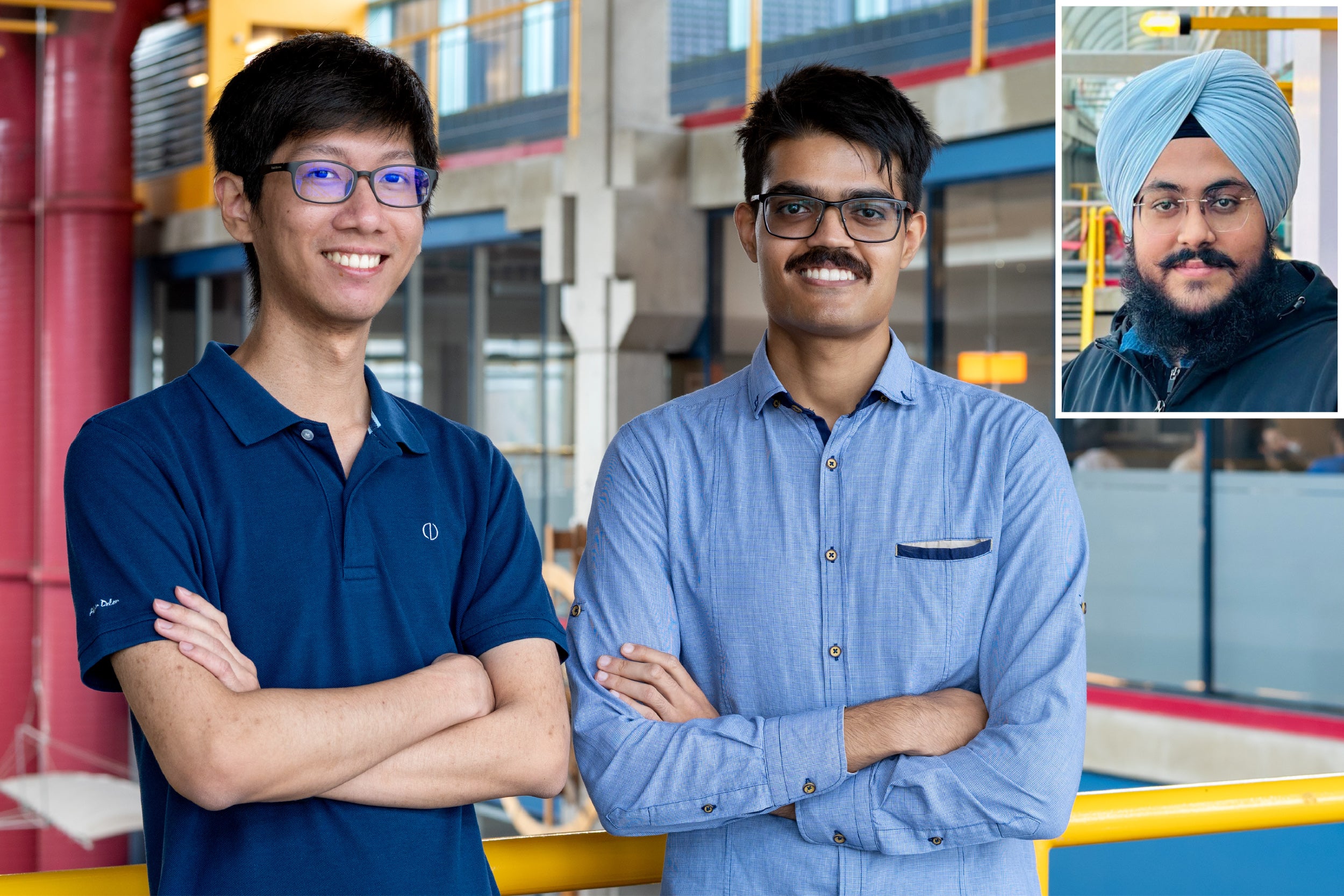

Anudeep is collaborating with master’s students Prach Chantasantitam and Gurjot Singh. Together, they will explore how attackers could exploit the open-ended, long-form nature of LLM outputs, and the frequent updates these models undergo, to create backdoors that are difficult to detect, remove and defend against.

Their work investigates how white-box attackers, adversaries with complete access to a model’s architecture and parameters, can fine-tune an LLM so that malicious outputs are triggered by the model’s own previously generated text. Because the trigger emerges from the model’s own output, these backdoors are challenging to detect and eliminate.

As LLMs are continually updated and retrained, attackers could reinsert or adapt backdoors after they have been removed, a threat that remains largely unexplored in current research. By studying both attacks and defences, Anudeep and his collaborators aim to improve the understanding of LLM vulnerabilities and to develop more effective defence strategies.

L to R: Prach Chantasantitam, Anudeep Das, Gurjot Singh (inset)

Anudeep Das is a PhD student advised by Professors N. Asokan (Executive Director, CPI) and Florian Kerschbaum (inaugural Executive Director, CPI). His research focuses on analyzing and improving the security, privacy and fairness of generative AI systems. His work has explored securing image-generative diffusion models and, more recently, LLM safety, the focus of this Open Philanthropy–funded project.

Prach Chantasantitam, advised by Professor N. Asokan, brings a strong background in security from his work on FIPS 140-3 compliance testing. His research investigates how confidential computing technologies can be used to ensure regulatory compliance in modern machine learning systems.

Gurjot Singh, advised by Professor Diogo Barradas (Associate Director, CPI), focuses on leveraging AI to address challenges in security and privacy. He works on synthetic packet generation for censorship circumvention through covert channels and on securing generative AI models, and he has explored correlation attacks in anonymized 5G networks employing programmable switches.

Understanding the context: LLMs and backdoor attacks

Large language models have transformed natural language processing, powering applications ranging from machine translation and text summarization to conversational agents such as chatbots. But as these models are more widely deployed, they are increasingly exposed to security risks, with backdoor attacks among the most concerning.

A backdoor attack is a type of data poisoning attack in which an adversary intentionally manipulates a model during training or fine-tuning to insert hidden behaviours that remain latent until triggered by specific inputs. For example, a particular word or phrase might cause the LLM to generate harmful, misleading or malicious content.

In their project, the team will adopt a white-box threat model, which assumes the strongest possible adversary, with the aim of improving the security of LLMs. Their research will address some of the most challenging and realistic attack scenarios.

Because generative models can output arbitrary tokens, attackers have much greater flexibility both in where they insert triggers and in defining the target behaviours they want the model to exhibit. Anudeep and his collaborators aim to show that even when a defence successfully removes a backdoor, an adversary can still impose significant costs on the defender by making the removal degrade the model’s performance, undermining the practical feasibility of sustained protection.